Cluster plan

| *IP* | *Hostname* | *Role* | *OS version* |
|---|---|---|---|
| 192.168.2.101 | master | Master | CentOS 7.9 |
| 192.168.2.102 | slave1 | Worker | CentOS 7.9 |
| 192.168.2.103 | slave2 | Worker | CentOS 7.9 |

Preparation

On each of the three servers, add the following entries to /etc/hosts:

192.168.2.101 master

192.168.2.102 slave1

192.168.2.103 slave2
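If this step is scripted and re-run, appending blindly would duplicate the entries. A minimal sketch, assuming bash and a hypothetical helper name `add_hosts_entry`, that only appends a mapping when the hostname is not already present as a whole field:

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file only if that
# hostname is not already mapped, so the step can be re-run safely.
add_hosts_entry() {
  local file=$1 ip=$2 name=$3
  # Skip comment lines and compare the hostname as a whole field,
  # so "master" does not match "master2".
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on each server (as root):
#   add_hosts_entry /etc/hosts 192.168.2.101 master
#   add_hosts_entry /etc/hosts 192.168.2.102 slave1
#   add_hosts_entry /etc/hosts 192.168.2.103 slave2
```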

Check that the hostnames are set to master, slave1 and slave2 respectively.

Generate an SSH key pair (id_rsa and id_rsa.pub) on master and copy the public key to the workers. Run on master:

ssh-keygen -t rsa

ssh-copy-id -i ~/.ssh/id_rsa.pub root@slave1

ssh-copy-id -i ~/.ssh/id_rsa.pub root@slave2

This gives master passwordless SSH access to the worker nodes.

Run lscpu and confirm that each machine has at least 2 CPU cores.
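kubeadm init's preflight checks expect at least 2 CPUs on the control-plane node, so a scripted check can fail early. A minimal sketch, assuming GNU coreutils `nproc` is available; `check_min_cpus` is a hypothetical name:

```shell
# Hypothetical helper: succeed only if this machine has at least N CPUs.
# nproc prints the number of processing units available to the process.
check_min_cpus() {
  local min=${1:-2} have
  have=$(nproc)
  if [ "$have" -lt "$min" ]; then
    echo "only $have CPU(s) found, need at least $min" >&2
    return 1
  fi
  echo "$have CPU(s) found, OK"
}

# Usage: check_min_cpus 2 || exit 1
```

nproc reports the CPUs usable by the current process, which can be lower than the total shown by lscpu if CPU affinity is restricted.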

yum install vim lrzsz net-tools dnf

Create a Docker install script with the following content:

cd /home/tools/ && vim auto_install_docker.sh

export REGISTRY_MIRROR=https://registry.cn-hangzhou.aliyuncs.com

dnf install yum*

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

dnf install https://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable/Packages/containerd.io-1.2.13-3.1.el7.x86_64.rpm

yum install docker-ce docker-ce-cli -y

systemctl enable docker.service

systemctl start docker.service

docker version

Alternatively, to install a specific Docker version:

export REGISTRY_MIRROR=https://registry.cn-hangzhou.aliyuncs.com

dnf install yum*

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce-19.03.8 docker-ce-cli-19.03.8 containerd.io

systemctl enable docker.service

systemctl start docker.service

docker version

Problem encountered:

Public key for docker-ce-24.0.7-1.el7.x86_64.rpm is not installed

Public key for docker-ce-rootless-extras-24.0.7-1.el7.x86_64.rpm is not installed

To fix it, download the RPMs for your CentOS version from the official Docker site:

https://docs.docker.com/engine/install/centos/

https://download.docker.com/linux/centos/7/x86_64/stable/Packages/

docker-compose-plugin-2.21.0-1.el7.x86_64.rpm

docker-ce-rootless-extras-24.0.7-1.el7.x86_64.rpm, and so on.

Download each RPM package named in the error messages.

Then run the following in the download directory:

yum install docker-ce-cli-24.0.7-1.el7.x86_64.rpm docker-ce-24.0.7-1.el7.x86_64.rpm containerd.io-1.6.26-3.1.el7.x86_64.rpm docker-ce-rootless-extras-24.0.7-1.el7.x86_64.rpm docker-compose-plugin-2.21.0-1.el7.x86_64.rpm docker-buildx-plugin-0.11.2-1.el7.x86_64.rpm

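The packages then install directly, because yum does not check GPG signatures of local RPM files by default (localpkg_gpgcheck=0).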

Configure the Aliyun registry mirror (image accelerator):

mkdir -p /etc/docker

vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://qicojpi.mirror.aliyuncs.com"]

}

Replace the mirror URL with your own accelerator address.
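The same file can be written non-interactively instead of with vim. A minimal sketch, assuming a hypothetical helper `write_daemon_json` and a placeholder mirror URL. Only "registry-mirrors" is set on purpose: this guide later passes the cgroup driver through the systemd unit, and dockerd refuses to start when the same directive (exec-opts) is given both as a flag and in daemon.json.

```shell
# Hypothetical helper: write a minimal daemon.json with a single registry mirror.
# exec-opts is intentionally NOT set here, because the cgroup driver is passed
# as a dockerd flag in the systemd unit later on.
write_daemon_json() {
  local path=$1 mirror=$2
  mkdir -p "$(dirname "$path")"
  cat > "$path" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}

# Usage (as root; replace the URL with your own accelerator address):
#   write_daemon_json /etc/docker/daemon.json https://<your-id>.mirror.aliyuncs.com
#   systemctl daemon-reload && systemctl restart docker
```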

systemctl daemon-reload

systemctl restart docker

System settings

Install nfs-utils and wget:

yum install -y nfs-utils wget

Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux:

setenforce 0

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

Disable swap:

swapoff -a

yes | cp /etc/fstab /etc/fstab_bak

cat /etc/fstab_bak |grep -v swap > /etc/fstab
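The grep -v swap above drops every line that merely contains "swap". A minimal alternative sketch, assuming a hypothetical helper `comment_out_swap`, that comments out only entries whose filesystem type (third field) is swap and keeps a backup:

```shell
# Hypothetical helper: comment out swap entries in an fstab-style file instead
# of deleting them, keeping a backup copy as <file>.bak.
comment_out_swap() {
  local fstab=$1
  cp -f "$fstab" "$fstab.bak"
  # Prefix "#" to non-comment lines whose third field (fs type) is "swap".
  awk '$0 !~ /^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab.bak" > "$fstab"
}

# Usage (as root): swapoff -a && comment_out_swap /etc/fstab
```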

Create a script, sys_config.sh, that edits /etc/sysctl.conf:

vim sys_config.sh

\# If the setting already exists, modify it

sed -i "s#^net.ipv4.ip_forward.*#net.ipv4.ip_forward=1#g" /etc/sysctl.conf

sed -i "s#^net.bridge.bridge-nf-call-ip6tables.*#net.bridge.bridge-nf-call-ip6tables=1#g" /etc/sysctl.conf

sed -i "s#^net.bridge.bridge-nf-call-iptables.*#net.bridge.bridge-nf-call-iptables=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.all.disable_ipv6.*#net.ipv6.conf.all.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.default.disable_ipv6.*#net.ipv6.conf.default.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.lo.disable_ipv6.*#net.ipv6.conf.lo.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.all.forwarding.*#net.ipv6.conf.all.forwarding=1#g" /etc/sysctl.conf

\# The setting may be missing, so append it as well

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.all.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.default.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.lo.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.all.forwarding = 1" >> /etc/sysctl.conf

\# Apply the settings

sysctl -p

Make the script executable and run it:

chmod a+x ./sys_config.sh && ./sys_config.sh
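The script above modifies and then also appends each key, so re-running it leaves duplicate lines. A minimal idempotent sketch, assuming GNU sed and a hypothetical helper `set_sysctl`: it rewrites the key if present and appends it only if missing. Note that the net.bridge.* keys only exist once the br_netfilter kernel module is loaded, so `modprobe br_netfilter` may be needed before `sysctl -p`.

```shell
# Hypothetical helper: set "key = value" in a sysctl-style file idempotently.
set_sysctl() {
  local file=$1 key=$2 value=$3 re
  # Escape the dots in the key so they match literally in the regex.
  re=$(printf '%s' "$key" | sed 's/\./\\./g')
  touch "$file"
  if grep -q "^[[:space:]]*${re}[[:space:]]*=" "$file"; then
    # Key exists: rewrite the whole line in place.
    sed -i "s|^[[:space:]]*${re}[[:space:]]*=.*|${key} = ${value}|" "$file"
  else
    # Key missing: append it once.
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage (as root):
#   modprobe br_netfilter
#   set_sysctl /etc/sysctl.conf net.ipv4.ip_forward 1
#   set_sysctl /etc/sysctl.conf net.bridge.bridge-nf-call-iptables 1
#   sysctl -p
```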

Install Kubernetes:

Configure the Kubernetes yum repository:

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
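The repo file can also be written without an editor. A minimal sketch using a heredoc, with the hypothetical helper name `write_k8s_repo`; the contents match the file above, with both gpgkey URLs on one space-separated line. Because gpgcheck and repo_gpgcheck stay 0, the keys are listed but not enforced.

```shell
# Hypothetical helper: write the Aliyun Kubernetes repo definition to a file
# (defaults to /etc/yum.repos.d/kubernetes.repo).
write_k8s_repo() {
  local path=${1:-/etc/yum.repos.d/kubernetes.repo}
  # Quoted 'EOF' so nothing inside the heredoc is expanded by the shell.
  cat > "$path" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}

# Usage (as root): write_k8s_repo && yum makecache
```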

Install kubelet, kubeadm and kubectl:

yum install -y kubelet-1.18.2 kubeadm-1.18.2 kubectl-1.18.2

Change Docker's cgroup driver to systemd:

sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service
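To confirm the edit took effect before restarting Docker, the same rewrite can be wrapped so that it reports whether the flag is present. A minimal sketch with the hypothetical helper name `set_docker_cgroup_systemd`; it applies the same ExecStart replacement as the command above to a given unit file.

```shell
# Hypothetical helper: apply the ExecStart rewrite to a docker unit file, then
# return success only if the systemd cgroup driver flag is present.
set_docker_cgroup_systemd() {
  local unit=${1:-/usr/lib/systemd/system/docker.service}
  # Same replacement as above; re-running it is harmless (the line is rewritten identically).
  sed -i 's#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#' "$unit"
  grep -q '^ExecStart=.*native.cgroupdriver=systemd' "$unit"
}

# Usage (as root): set_docker_cgroup_systemd && systemctl daemon-reload && systemctl restart docker
```

After the restart, `docker info --format '{{.CgroupDriver}}'` should print systemd. Keep in mind that files under /usr/lib/systemd/system can be overwritten when the docker-ce package is upgraded, so the edit may need to be reapplied.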

Restart Docker and start kubelet:

systemctl daemon-reload

systemctl restart docker

systemctl enable kubelet && systemctl start kubelet

# Uninstall the old Kubernetes version

yum remove -y kubelet kubeadm kubectl

# Install kubelet, kubeadm and kubectl (this command pins version 1.18.20)

yum install -y kubeadm-1.18.20-0.x86_64 kubectl-1.18.20-0.x86_64 kubelet-1.18.20-0.x86_64

# Change Docker's cgroup driver to systemd

# # In /usr/lib/systemd/system/docker.service, change the line ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

# # to ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd

# Without this change, you may see the following warning when adding worker nodes

# [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd".

# Please follow the guide at https://kubernetes.io/docs/setup/cri/

sed -i "s#^ExecStart=/usr/bin/dockerd.*#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd#g" /usr/lib/systemd/system/docker.service

# Configure a Docker registry mirror to speed up image pulls and make them more reliable

# If your access to https://hub.docker.io is already fast and stable, you can skip this step

# curl -sSL https://kuboard.cn/install-script/set_mirror.sh | sh -s ${REGISTRY_MIRROR}

Restart Docker and start kubelet:

systemctl daemon-reload

systemctl restart docker

systemctl enable docker

systemctl enable kubelet && systemctl start kubelet

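kubelet reports errors on startup at this point because the node has not been initialized yet; this is expected and clears up once kubeadm init (or kubeadm join) has run.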

docker version

Initialize the master node:

Initialize Kubernetes (on master)

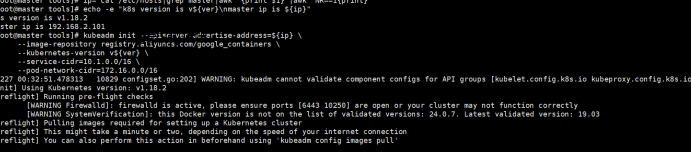

Get the Kubernetes version:

ver=`kubeadm version|awk '{print $5}'|sed "s/[^0-9|\.]//g"|awk 'NR==1{print}'`
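The awk '{print $5}' pipeline depends on the exact column layout of `kubeadm version`. A minimal sketch that instead extracts the GitVersion field; `parse_kubeadm_version` is a hypothetical name, and the parser assumes the `&version.Info{... GitVersion:"vX.Y.Z" ...}` output format shown by kubeadm 1.18. kubeadm also offers `kubeadm version -o short`, which prints just the version with its leading "v".

```shell
# Hypothetical helper: read `kubeadm version` output on stdin and print the
# version number without the leading "v" (e.g. 1.18.2).
parse_kubeadm_version() {
  sed -n 's/.*GitVersion:"v\([0-9][0-9.]*\)".*/\1/p' | head -n 1
}

# Usage: ver=$(kubeadm version | parse_kubeadm_version)
```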

Get the master host's IP:

ip=`cat /etc/hosts|grep master|awk '{print $1}'|awk 'NR==1{print}'`
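`grep master` also matches comment lines and names such as master2. A minimal sketch, assuming a hypothetical helper `get_host_ip`, that matches the hostname as an exact field and skips comments:

```shell
# Hypothetical helper: print the IP mapped to an exact hostname in a hosts file
# (defaults to /etc/hosts). Prints nothing if the name is not found.
get_host_ip() {
  local name=$1 file=${2:-/etc/hosts}
  awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }' "$file"
}

# Usage: ip=$(get_host_ip master)
```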

Verify:

echo -e "k8s version is v${ver}\nmaster ip is ${ip}"

[root@master tools]# ver=`kubeadm version|awk '{print $5}'|sed "s/[^0-9|\.]//g"|awk 'NR==1{print}'`

[root@master tools]# ip=`cat /etc/hosts|grep master|awk '{print $1}'|awk 'NR==1{print}'`

[root@master tools]# echo -e "k8s version is v${ver}\nmaster ip is ${ip}"

k8s version is v1.18.2

master ip is 192.168.2.101

[root@master tools]# kubeadm init --apiserver-advertise-address=${ip} \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v${ver} \

--service-cidr=10.1.0.0/16 \

--pod-network-cidr=172.16.0.0/16

W1227 00:32:51.478313 10829 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.2

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 24.0.7. Latest validated version: 19.03

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

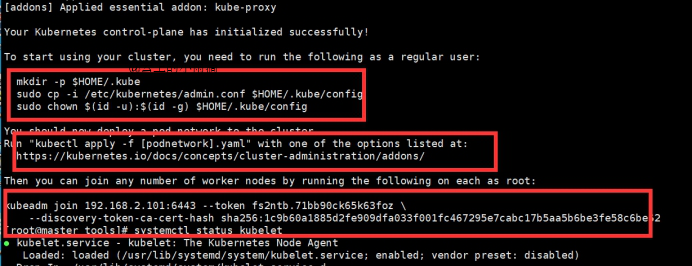

Initialization succeeded. The output ends with three key items; run the first two directly on master:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@master tools]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

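Run the third item (the kubeadm join command) on each worker node. The token in it is randomly generated and expires; if it has expired, regenerate the command on master with: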

kubeadm token create --print-join-command

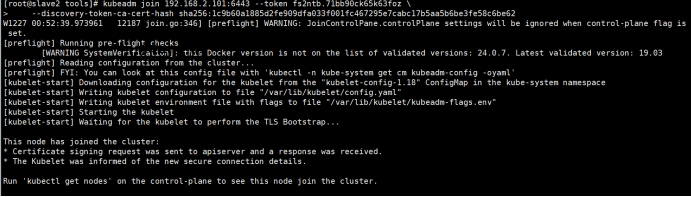

Run on slave2:

Join the worker nodes and verify:

On master, run the following command to get the join command and its parameters:

kubeadm token create --print-join-command


[root@master ~]# kubeadm token create --print-join-command

W1227 01:24:30.930613 3589 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

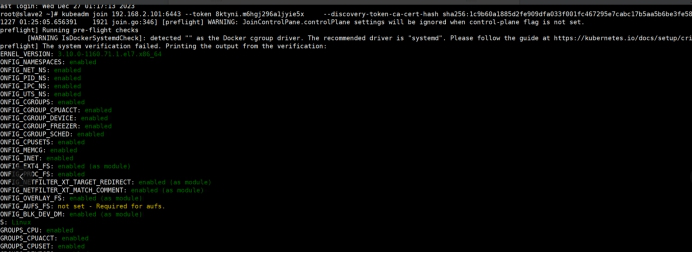

kubeadm join 192.168.2.101:6443 --token 8ktyni.m6hgj296a1jyie5x --discovery-token-ca-cert-hash sha256:1c9b60a1885d2fe909dfa033f001fc467295e7cabc17b5aa5b6be3fe58c6be62


The line above is the generated join command.

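Note: by default the token in the join command is valid for 24 hours (kubeadm's default token TTL, adjustable with kubeadm token create --ttl). While it is valid, it can be used to join any number of worker nodes.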

Run it on each worker node:

Check from master:

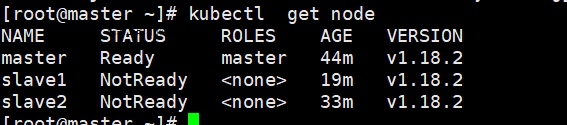

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready master 44m v1.18.2

slave1 NotReady

slave2 NotReady

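As you can see, the worker nodes have joined, but their status is NotReady and their role (ROLES) is empty. Don't panic; first fix the easiest part, the role: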

kubectl label nodes node node-role.kubernetes.io/node=

// the "node" right after "nodes" is the node's hostname;

// the final "node" is the role name (master is another role you can set)

Apply it to both workers and verify:

kubectl label nodes slave1 node-role.kubernetes.io/node=

kubectl label nodes slave2 node-role.kubernetes.io/node=

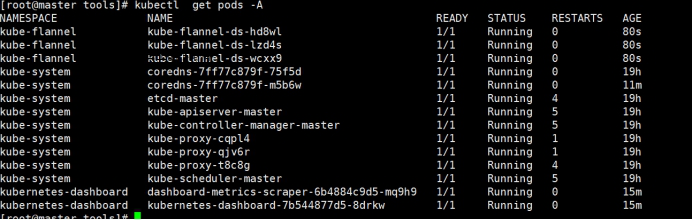
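With more workers, the labeling can be done in one loop. A minimal sketch, assuming a hypothetical function `label_workers`; the KUBECTL variable is an illustrative override (for example KUBECTL=echo prints the commands instead of running them). `--overwrite` is a standard kubectl label flag that makes re-running the loop harmless.

```shell
# Hypothetical sketch: give every listed node the "node" role label.
label_workers() {
  local kubectl=${KUBECTL:-kubectl} node
  for node in "$@"; do
    # --overwrite avoids an error if the label is already set.
    $kubectl label nodes "$node" node-role.kubernetes.io/node= --overwrite
  done
}

# Usage on master: label_workers slave1 slave2
# Dry run:         KUBECTL=echo label_workers slave1 slave2
```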

Check the master node's initialization result:

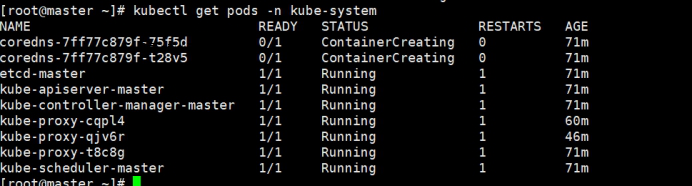

Make sure all pods are in the Running state.

Commands to check the initialization result:

kubectl get nodes -o wide

kubectl get pods -n kube-system

kube-scheduler-master 1/1 Running 1 71m

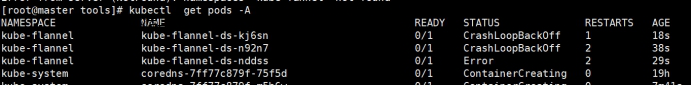
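Scanning the pod list by eye is error-prone once there are many pods. A minimal sketch, assuming a hypothetical helper `pods_not_running` that reads `kubectl get pods -A --no-headers` output (where STATUS is the fourth column) and lists every pod not in the Running state:

```shell
# Hypothetical helper: read `kubectl get pods -A --no-headers` on stdin and print
# namespace/name for each pod whose STATUS is not "Running".
# Returns non-zero if at least one such pod is found.
pods_not_running() {
  awk '$4 != "Running" { print $1 "/" $2; bad = 1 } END { exit bad }'
}

# Usage: kubectl get pods -A --no-headers | pods_not_running
```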

kubectl logs -f -n kube-flannel kube-flannel-ds-kj6sn

E1227 12:20:41.167333 1 main.go:332] Error registering network: failed to acquire lease: subnet "10.244.0.0/16" specified in the flannel net config doesn't contain "172.16.0.0/24" PodCIDR of the "master" node

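The subnet is wrong: kube-flannel.yml was applied without adjusting its network, so flannel still uses 10.244.0.0/16 instead of the 172.16.0.0/16 passed to kubeadm init via --pod-network-cidr. Download kube-flannel.yml and change the network in net-conf.json: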

net-conf.json: |

{

"Network": "172.16.0.0/16",

"Backend": {

"Type": "vxlan"

}

}
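The edit can be scripted so the manifest always matches the --pod-network-cidr used at init time. A minimal sketch, assuming GNU sed and a hypothetical helper `set_flannel_network`; it rewrites whatever value follows "Network": in the downloaded file.

```shell
# Hypothetical helper: set the "Network" value in a local kube-flannel.yml and
# return success only if the new CIDR is present afterwards.
set_flannel_network() {
  local manifest=$1 cidr=$2
  # Keep the '"Network": "' prefix and closing quote, replace only the CIDR.
  sed -i -E "s#(\"Network\":[[:space:]]*\")[^\"]*(\")#\1${cidr}\2#" "$manifest"
  grep -q "\"Network\": *\"${cidr}\"" "$manifest"
}

# Usage on master:
#   wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
#   set_flannel_network kube-flannel.yml 172.16.0.0/16
```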

kubectl delete -f kube-flannel.yml

kubectl apply -f kube-flannel.yml

kubectl get pods -A